I had a go at re-creating something I'd seen a while ago in this demo by Hans Godard: essentially hijacking Maya's polyModifiers with a componentList. It's something I've been exploring with a colleague over the last week, and there's lots of fun to be had. I'm sure there are lots of ways of chaining the modifiers to produce cool effects.

Wednesday, 12 April 2017

Sunday, 2 April 2017

Texture Deformer

I had a look at combining Maya's 2D procedural textures with a custom deformer this week. It turns out you can produce some pretty sweet effects, and I haven't really delved into them much yet, since there's a ton of layering and other cool things you can do with the procedural textures, including animating them.

I fired rays from the points of the deforming mesh in the direction of the plane's reversed normal and used MFnMesh.anyIntersection to test for a collision. MFnMesh.getUVAtPoint then gave me the UV values at the hit point returned by anyIntersection, and a colleague pointed me to MDynamicsUtil.evalDynamics2dTexture, which gave me the colour information I needed at those UVs.

All in all, it's pretty damn speedy considering all the calculations it's performing. I added in the blend falloff functionality from the inflate deformer for some additional deformation options.
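To make the ray, UV and colour chain a bit more concrete, here's a rough pure-Python sketch of the same idea with no Maya calls. It isn't the plugin's code: it assumes a flat, square texture plane (described by a hypothetical origin, two unit axes and a size), swaps anyIntersection and getUVAtPoint for a simple projection onto that plane, uses a made-up `checker` function in place of evalDynamics2dTexture, and assumes each point is pushed along its own normal.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def add_scaled(a, b, s):
    """Return a + b * s."""
    return tuple(x + y * s for x, y in zip(a, b))


def uv_on_plane(point, origin, u_axis, v_axis, size):
    """Fire a ray from `point` along the plane's reversed normal; return (u, v) or None."""
    normal = cross(u_axis, v_axis)  # unit length when the axes are unit and orthogonal
    dist = dot(sub(point, origin), normal)  # signed height of the point above the plane
    if dist < 0:
        return None  # the reversed-normal ray points away from the plane
    hit = add_scaled(point, normal, -dist)  # travel `dist` along the reversed normal
    rel = sub(hit, origin)
    u = dot(rel, u_axis) / size + 0.5
    v = dot(rel, v_axis) / size + 0.5
    if 0.0 <= u <= 1.0 and 0.0 <= v <= 1.0:
        return u, v
    return None  # the ray misses the square


def checker(u, v, repeats=4):
    """Hypothetical stand-in for a 2D procedural texture: 1.0 or 0.0 in a checker pattern."""
    return float((int(u * repeats) + int(v * repeats)) % 2)


def deform(points, normals, weight, origin, u_axis, v_axis, size):
    """Push each point along its own normal by (texture value * weight)."""
    result = []
    for p, n in zip(points, normals):
        uv = uv_on_plane(p, origin, u_axis, v_axis, size)
        amount = checker(*uv) * weight if uv else 0.0
        result.append(add_scaled(p, n, amount))
    return result
```

In the real deformer the hit test and UV lookup come from MFnMesh, so the "plane" can be any mesh rather than a flat square.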

Thursday, 23 March 2017

Inflate Deformer

I was recently discussing with a colleague how to create a deformer that simulates a foot stepping into snow.

I decided to give it a go myself, and the results aren't too bad. Most of the work was already done in the collision deformer I put together previously; the most difficult part was getting the mesh to inflate back to its default shape. There's a great demo by Marco D'Ambros on this sort of effect.

Monday, 2 January 2017

Pose Reader / Custom Locator

I had a bit of spare time over the holidays so I set myself a side project to work on when I got a spare moment.

I decided to try my luck with a custom locator, as I'd never built one before, but I wanted to make it useful in some way, so I went for a pose reader! Yeah... very original, I know. But it's a staple in any rigger's toolkit (whether as a plugin or built from out-of-the-box Maya nodes).

This video by Marco Giordano helped me get my maths straight on the subject, and I had to read up a bit on spherical coordinates to place the cone points correctly for a given angle.
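For anyone brushing up on the same maths, here's a minimal pure-Python sketch of both halves: placing the cone's rim points with spherical coordinates (taking X as the polar axis, which is my assumption), and a common pose-reader falloff where the weight drops linearly from 1 at the rest axis to 0 at the cone's edge. The function names are hypothetical, and this isn't the plugin's code.

```python
import math


def cone_points(half_angle_deg, length, segments=8):
    """Rim points of a cone opening along +X, using X as the polar axis."""
    theta = math.radians(half_angle_deg)  # polar angle measured from +X
    height = length * math.cos(theta)  # distance along the cone's axis
    radius = length * math.sin(theta)  # radius of the rim circle
    points = []
    for i in range(segments):
        phi = 2.0 * math.pi * i / segments  # azimuth around the X axis
        points.append((height, radius * math.cos(phi), radius * math.sin(phi)))
    return points


def pose_weight(rest_axis, current_axis, half_angle_deg):
    """1.0 when the axes line up, falling linearly to 0.0 at the edge of the cone."""
    dot = sum(a * b for a, b in zip(rest_axis, current_axis))
    len_rest = math.sqrt(sum(a * a for a in rest_axis))
    len_current = math.sqrt(sum(b * b for b in current_axis))
    cos_angle = max(-1.0, min(1.0, dot / (len_rest * len_current)))  # clamp float error
    angle = math.degrees(math.acos(cos_angle))
    return max(0.0, 1.0 - angle / half_angle_deg)


# A 45-degree cone, 2 units long, drawn with 8 rim points
for p in cone_points(45.0, 2.0):
    print(p)
```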

Unfortunately it's legacy viewport only. The scripted (Python API) examples for custom locators in the devkit seem to be... well... a bit of a mess, so I skipped VP 2.0 support. I'm lazy.

Labels: api, autodesk, mpxlocatornode, pose reader, python api, rigging, vectors

Wednesday, 21 December 2016

Matrix fun!

Here are a few snippets I wrote while learning how to work with matrices via Maya's Python API.

Note: You can get the local and world matrices directly from attributes on a dag node. You can also use the xform command.

In the example image, pCube1 is a child of pSphere1. If both objects are positioned arbitrarily in the scene, we can find the local and world matrices for pCube1, and its world-space and local-space transforms (translate, rotate, scale), with the bits of code below. The channel box only gives us local values, relative to the object's parent.

We could just call the inclusiveMatrix() method on a dagPath object to retrieve the world matrix (as is done in the second snippet below), but let's just say that, for umm... some unknown reason, you only had the local matrix to work with.

Finding a world matrix from a local matrix

```python
from __future__ import print_function  # print() behaves the same in Python 2 and 3
import math

import maya.OpenMaya as om

transformFn = om.MFnTransform()  # Create an empty transform function set

mSel = om.MSelectionList()
mSel.add('pSphere1')
mSel.add('pCube1')

dagPath = om.MDagPath()

mSel.getDagPath(0, dagPath)  # Get the dagPath for pSphere1
transformFn.setObject(dagPath)  # Set the function set to operate on pSphere1
transformationMatrix = transformFn.transformation()  # Get an MTransformationMatrix object
matrixA = transformationMatrix.asMatrix()  # MMatrix representing the local matrix of pSphere1

mSel.getDagPath(1, dagPath)  # Get the dagPath for pCube1
transformFn.setObject(dagPath)
transformationMatrix = transformFn.transformation()
matrixB = transformationMatrix.asMatrix()  # MMatrix representing the local matrix of pCube1

# Multiply the local matrices up from child to parent. This assumes pSphere1
# sits at the root of the scene, so its local matrix is also its world matrix.
worldMatrixB = matrixB * matrixA

# <-- Not sure about this space parameter. All I care about is the 4th row of the matrix.
translation = om.MTransformationMatrix(worldMatrixB).getTranslation(om.MSpace.kWorld)
rotation = om.MTransformationMatrix(worldMatrixB).eulerRotation()  # Radians

print('\n')
print('pCube1.translate ->', translation.x, translation.y, translation.z)
print('pCube1.rotate ->', math.degrees(rotation.x), math.degrees(rotation.y), math.degrees(rotation.z))
```

Finding a local matrix from a world matrix

```python
from __future__ import print_function  # print() behaves the same in Python 2 and 3
import math

import maya.OpenMaya as om

mSel = om.MSelectionList()
mSel.add('pSphere1')
mSel.add('pCube1')

dagPath = om.MDagPath()

mSel.getDagPath(0, dagPath)  # Get the dagPath for pSphere1
worldMatrixInverseA = dagPath.inclusiveMatrixInverse()  # World inverse matrix of pCube1's parent (pSphere1)

mSel.getDagPath(1, dagPath)  # Get the dagPath for pCube1
worldMatrixB = dagPath.inclusiveMatrix()  # World matrix of pCube1

# Multiply pCube1's world matrix by its parent's world inverse matrix
localMatrixB = worldMatrixB * worldMatrixInverseA

# <-- Not sure about this space parameter. All I care about is the 4th row of the matrix.
translation = om.MTransformationMatrix(localMatrixB).getTranslation(om.MSpace.kWorld)
rotation = om.MTransformationMatrix(localMatrixB).eulerRotation()  # Radians

print('\n')
print('pCube1.translate ->', translation.x, translation.y, translation.z)
print('pCube1.rotate ->', math.degrees(rotation.x), math.degrees(rotation.y), math.degrees(rotation.z))
```
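The same multiplication order can be sanity-checked outside Maya. MMatrix keeps the translation in the 4th row and treats points as row vectors, which is why the child's matrix goes on the left. This pure-Python sketch uses hypothetical helper names, and its inverse only handles rotation plus translation (no scale). It checks that local * parentWorld gives the world matrix, and that world * parentWorldInverse gets the local matrix back.

```python
import math


def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as lists of rows."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)] for r in range(4)]


def make_transform(rot_z_deg, tx, ty, tz):
    """Rotation about Z plus a translation, in the row-vector layout (translation in row 4)."""
    c = math.cos(math.radians(rot_z_deg))
    s = math.sin(math.radians(rot_z_deg))
    return [[c, s, 0.0, 0.0],
            [-s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [tx, ty, tz, 1.0]]


def rigid_inverse(m):
    """Inverse of a rotation + translation matrix: transpose the 3x3, then -t * R^T."""
    inv = [[m[c][r] for c in range(3)] + [0.0] for r in range(3)]
    t = m[3][:3]
    inv.append([-sum(t[k] * inv[k][c] for k in range(3)) for c in range(3)] + [1.0])
    return inv


parent_world = make_transform(30.0, 5.0, 0.0, 0.0)  # stand-in for pSphere1 at the root
child_local = make_transform(45.0, 0.0, 2.0, 0.0)  # stand-in for pCube1

child_world = mat_mul(child_local, parent_world)  # child local * parent world
recovered = mat_mul(child_world, rigid_inverse(parent_world))  # world * parent world inverse
print(child_world[3][:3])  # world-space translation of the child
```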

Labels: api, local matrix, matrix, maya, python, python api, world matrix

Thursday, 15 December 2016

Bounding Box fun!

I was recently working with a colleague on a custom deformer which required access to bounding box information for input meshes. I looked into different ways of getting that data via the API and we ended up trying a few different methods which I thought might be worth sharing.

Since I only had access to kMeshData in the deformer, I couldn't create a dagPath (more on that later), but for writing a utility script or testing the examples in the Script Editor, these will work just fine.

So both a transform and a mesh have bounding box information. I suppose it makes sense... but I'd never really thought about it.

Method 1 - Using the boundingBox method of a MFnDagNode function set

The bounding box of a transform relates to its children, and the bounding box of the mesh relates to its components. Since a mesh needs a transform in order to live in the scene, it can be confusing which one relates to what, but all we really need to know is that the values displayed in the Attribute Editor are in object space.

```python
import maya.OpenMaya as om

transform = 'pCube1'

mSel = om.MSelectionList()
dagPath = om.MDagPath()

mSel.add(transform)
mSel.getDagPath(0, dagPath)

dagFn = om.MFnDagNode(dagPath)

# Returns the bounding box for the dag node in object space.
boundingBox = dagFn.boundingBox()

# There are a few useful methods available, including min and max,
# which return the min/max values shown in the Attribute Editor.
# (bbMin/bbMax avoid shadowing Python's built-in min and max.)
bbMin = boundingBox.min()
bbMax = boundingBox.max()
```

Since the mesh is a child of the transform, the bounding box coordinates shown on the transform also tell us the bounding box of the mesh.

However, as I mentioned above, these are in object space. So if the transform is parented to another transform and the object is positioned arbitrarily in the world, these values may not be of much use, depending on the circumstances.

Group the polyCube, then move that group somewhere in the world. If you check the min/max values, you'll notice they don't change.

Method 2 - Building a bounding box from points

We can build a bounding box using the expand method and the points of the mesh. All we have to do is find the world-space position of each vertex and add it to the bounding box object.

```python
# Using the dagPath from the previous example, let's create a vertex iterator.
vertIt = om.MItMeshVertex(dagPath)
# Note: the iterator is clever enough to know we want to work on the mesh,
# even though we are passing in a dagPath for a transform.

boundingBox = om.MBoundingBox()

while not vertIt.isDone():
    # Get the position of the current vertex (in world space)
    position = vertIt.position(om.MSpace.kWorld)
    boundingBox.expand(position)  # Expand the bounding box to include this vertex
    vertIt.next()

bbMin = boundingBox.min()
bbMax = boundingBox.max()
```

If we again group the polyCube and move the group somewhere in the world, this time we get the world-space coordinates for the min and max.

Using the expand method may be quite costly on a mesh with lots of vertices, so here's a quicker way of doing it.

Method 3 - Creating world-space bounding box using min/max point matrix multiplication

```python
dagPath.extendToShape()  # Set the dagPath object to the mesh itself
dagFn.setObject(dagPath)  # Update the dag function set with the mesh object
boundingBox = dagFn.boundingBox()  # Grab the bounding box (object space)

bbMin = boundingBox.min() * dagPath.inclusiveMatrix()
bbMax = boundingBox.max() * dagPath.inclusiveMatrix()

# Create a new bounding box, initializing it with the new world-space min and max points
boundingBox = om.MBoundingBox(bbMin, bbMax)

bbMin = boundingBox.min()
bbMax = boundingBox.max()
```

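The important part is the pair of lines where we multiply the object-space min and max points by the mesh's inclusive (world) matrix, which transforms them into world space. One caveat: this is only reliable when there's no rotation. Once the box is rotated, the transformed min and max are just two opposite corners and no longer enclose the whole mesh.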
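To get a box that still encloses the mesh under rotation, all eight corners of the object-space box can be transformed and the min/max taken from the results. Here's a minimal pure-Python sketch, assuming a row-major 4x4 matrix in Maya's row-vector layout (translation in the 4th row); `transform_point` and `world_bbox` are hypothetical helpers, not Maya API calls.

```python
import math
from itertools import product


def transform_point(p, m):
    """Point (as a row vector) times a 4x4 matrix; returns x, y, z."""
    x, y, z = p
    return tuple(x * m[0][c] + y * m[1][c] + z * m[2][c] + m[3][c] for c in range(3))


def world_bbox(bb_min, bb_max, m):
    """Transform all 8 corners of an object-space box and take the min/max of the results."""
    corners = [transform_point(corner, m) for corner in product(*zip(bb_min, bb_max))]
    new_min = tuple(min(p[i] for p in corners) for i in range(3))
    new_max = tuple(max(p[i] for p in corners) for i in range(3))
    return new_min, new_max


cos45 = sin45 = math.sqrt(0.5)  # 45 degrees about Z
rot45 = [[cos45, sin45, 0.0, 0.0],
         [-sin45, cos45, 0.0, 0.0],
         [0.0, 0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0, 1.0]]
print(world_bbox((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0), rot45))
```

With this 45-degree rotation, transforming only (-1, -1, -1) and (1, 1, 1) lands both points at x = 0, while the eight-corner version correctly spans roughly -1.414 to 1.414 in X.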

Method 4 - transformUsing method of a MBoundingBox object

We can reduce the number of steps further by using the transformUsing method. It takes an MMatrix and transforms the bounding box for us.

```python
boundingBox = dagFn.boundingBox()
boundingBox.transformUsing(dagPath.inclusiveMatrix())

bbMin = boundingBox.min()
bbMax = boundingBox.max()
```

Bonus - Testing intersection

We can use the intersects method to check for collision with another bounding box.

```python
anotherTransform = 'pCube2'
mSel.add(anotherTransform)
mSel.getDagPath(1, dagPath)

dagFn.setObject(dagPath)
anotherBoundingBox = dagFn.boundingBox()

# Returns True or False
print(boundingBox.intersects(anotherBoundingBox))
```

For the intersection result to be correct, you'd need to build a world-space bounding box for pCube2 as well, since anotherBoundingBox is still in object space...

I'll leave that up to you :)
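Whichever way you build the world-space boxes, the overlap check itself boils down to comparing ranges on each axis. Here's a pure-Python sketch of that logic; the helper name is hypothetical, and in the API MBoundingBox.intersects does this for you. Note this sketch counts boxes that merely touch as overlapping, which may or may not match how intersects treats that case.

```python
def boxes_intersect(min_a, max_a, min_b, max_b):
    """Two axis-aligned boxes overlap only if their ranges overlap on every axis."""
    return all(min_a[i] <= max_b[i] and min_b[i] <= max_a[i] for i in range(3))


# Two unit cubes in world space, the second shifted 1.5 units along X
print(boxes_intersect((0, 0, 0), (1, 1, 1), (1.5, 0, 0), (2.5, 1, 1)))  # False: gap on X
print(boxes_intersect((0, 0, 0), (1, 1, 1), (0.5, 0.5, 0.5), (1.5, 1.5, 1.5)))  # True
```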

Sunday, 16 October 2016

Stretch / Compress Deformer update

Following on from my last post, I decided to take the compress deformer a bit further.

The idea is to create two input meshes that define the stretch and compress behavior for the source mesh. When a face is being compressed, it looks up the corresponding components of the compress mesh and uses them to define (approximately) where its points should go. The same goes for stretching.

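I ran into an annoying bug with MFnMesh.getNormals(). After getting weird results from the deformation and re-checking the code several times, I turned to Google to see if anyone else had the same issue. Lo and behold, .getNormals() apparently returns some normals in the wrong order. One to keep in mind. (As far as I can tell, the list it returns isn't indexed by vertex ID: normals are stored per face-vertex, so the list needs mapping through getNormalIds, or getVertexNormals can be used instead.)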

If you haven't already seen this awesome plug-in, it does this sort of thing, only much better. Seriously cool stuff. Check it out. :)

Monday, 26 September 2016

Stretch / Compression Deformer

Just a quick one this week. I saw a great video on Vimeo for a sort of stretch / compression deformer and it inspired me to try it out.

I figured it was in some way based on the area of the polygons, so as they get smaller, they push outwards along their normals.

Luckily, MItMeshPolygon has a getArea function for that (although I did compute it using the tris and a few cross products at first... doh!).
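For reference, the "tris and cross products" route looks roughly like this in plain Python. It fans triangles out from the first vertex and sums their cross products before taking the length, which assumes the face is planar. The helper names are hypothetical; in the plugin, MItMeshPolygon.getArea does this for you.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def polygon_area(points):
    """Area of a planar polygon: fan triangles from the first vertex, sum their cross products."""
    p0 = points[0]
    total = (0.0, 0.0, 0.0)
    for p1, p2 in zip(points[1:], points[2:]):
        c = cross(sub(p1, p0), sub(p2, p0))  # vector with length = twice the triangle's area
        total = tuple(t + x for t, x in zip(total, c))
    return 0.5 * math.sqrt(sum(x * x for x in total))


square = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
print(polygon_area(square))  # 1.0
```

Summing the cross product vectors (rather than their lengths) keeps the result correct for non-convex planar faces too, since triangles that fold back contribute negatively.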

I didn't include any stretch in this: if the area increases past its default, it'll just do whatever it's doing, but if it gets smaller, it takes an average of all the surrounding faces and bulges, based on the painted deformer weight values.

Thanks to hippydrome.com for the model :)

Friday, 16 September 2016

Collision Deformer

yay collision!

It's been a while so I thought I'd update with progress on a collision deformer I've been working on.

I must have made 4 or 5 unsuccessful attempts at this before giving up. I took a month or so out and came back to it with a fresh mind, which I think helped. I finally got there in the end, but there's still more that can be added.

Tuesday, 7 June 2016

Deformers!

I've been diving into the world of deformers this week and it's opening up a whole new world of pain. Especially if you want to do something more substantial than make a ripple effect...which by the way, is one of the few things you can feast your eyes upon in the demo below. It's not as if you can do the same with a standard non-linear deformer in Maya*..

I'm going to try and work on a more functional collision deformer soon, but I think the next few posts will be devoted to demonstrating some basic things you can do with the Maya Python API. All I need to do is think up some good examples! :)

* ..hey..it's all about learning!

Sunday, 29 May 2016

Surface Constraint

Following on from last week, I've been looking at how to build something similar to the geometry constraint in Maya.

It's certainly a tricky one. I've just found out about tangents and binormals, which I'm still struggling to grasp, but they're useful for building a somewhat stable surface-based matrix... I think.

I'd like to do a bit of a write-up on it soon; there are so many things I'm not too sure about.

For one thing, using MFnMesh.getClosestPointAndNormal() won't return a normal in world space if I'm getting the mesh data using inputValue.asMeshTransformed(). Is this a bug? Maybe I'm using it wrong.

Here's a quick demo.

Wednesday, 25 May 2016

Vertex Constraint aka Rivet

Just a quick update this week. I learnt some cool stuff to do with building matrixes...or matrices. Yea..matrices.

As far as I can tell thus far in my investigations, you need the following basic ingredients to start with:

1) A unit vector

2) The normalized cross product of 1) and any vector that isn't a scalar multiple of 1) *

3) The cross product of 1) and 2). Again, this needs to be normalized

4) A point
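Here's a minimal pure-Python sketch of those four ingredients assembled into a 4x4 matrix in Maya's row layout (X, Y and Z axes in the first three rows, position in the 4th). The helper vector for step 2 is an input you pick; if it's parallel to the unit vector (the a and -a case from the footnote included), the cross product is the zero vector and there's no valid axis, so the sketch raises an error. All names are hypothetical.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    if length < 1e-9:
        raise ValueError("zero-length vector (e.g. a cross product of parallel vectors)")
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def build_matrix(direction, helper, point):
    """Rows 1-3 are the axes from the recipe above; row 4 is the position."""
    x_axis = normalize(direction)              # 1) a unit vector
    y_axis = normalize(cross(x_axis, helper))  # 2) normalized cross with a non-parallel vector
    z_axis = normalize(cross(x_axis, y_axis))  # 3) cross of 1) and 2), normalized
    return [list(x_axis) + [0.0],
            list(y_axis) + [0.0],
            list(z_axis) + [0.0],
            list(point) + [1.0]]               # 4) the point


print(build_matrix((0.0, 2.0, 0.0), (1.0, 0.0, 0.0), (1.0, 2.0, 3.0)))
```

In theory the normalize in step 3 is redundant, since the cross product of two perpendicular unit vectors is already unit length, but it's cheap insurance against floating-point drift.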

I picked up a good maths intro series from Chad Vernon, in which he alludes to how to build something resembling the classic rivet constraint. It was an interesting challenge to take on, as I only had some clues to go by rather than a step-by-step guide. I'm glad I stuck with it and got something working in the end.

I've tested it on a skinned mesh with blendshapes and it's doing the job so far. Yeah... I can't believe it either.

Thanks http://www.hippydrome.com/ for the free model.

* I wonder what happens when you take the cross product of a and -a...

Friday, 20 May 2016

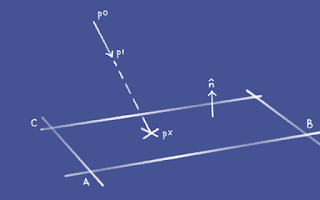

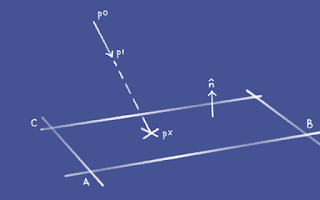

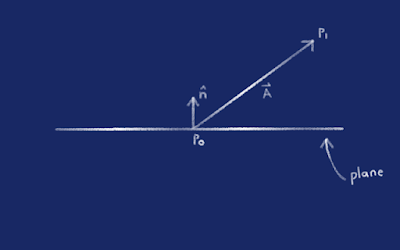

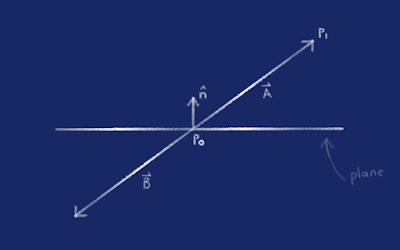

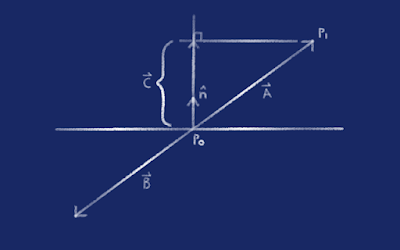

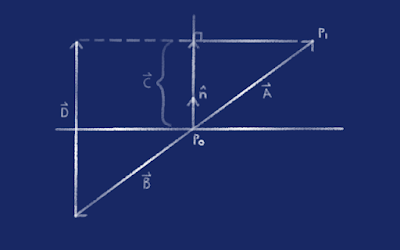

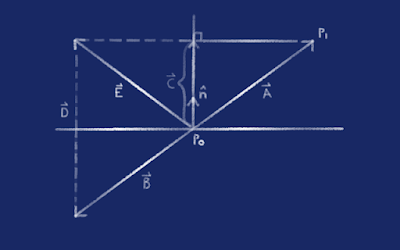

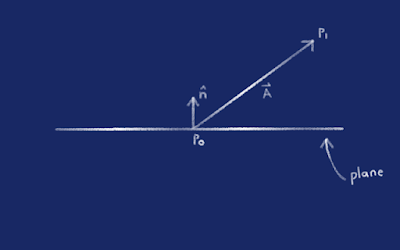

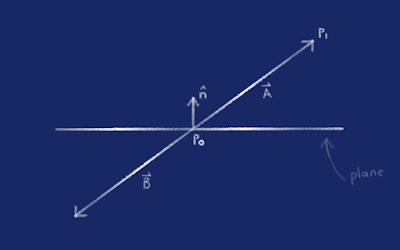

Line Plane Intersection

I went with line plane intersection this time around, for a couple of reasons.

First off, there's some excellent info on it already. This one really solidified it for me because it broke the process down step by step.

Having said that, these sorts of topics can be difficult to picture on paper sometimes... I mean, how does one go about visualizing the equation for a plane?

Anyway, on to it. I built this node in C++ using one of the examples in the devkit as a template. Surprisingly, there isn't too much manual work if it's a relatively simple compute and starting from a template makes things much easier. I think I spent the majority of the time learning the syntax for different input attributes.

As there is already so much good info out there for this topic, I'll just go over the first couple of steps of how I got the normal of the plane, without using any of the convenience methods in MFnMesh, MItGeometry etc.

---------------------------------------------

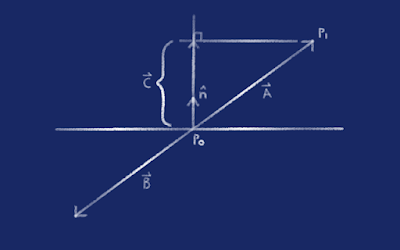

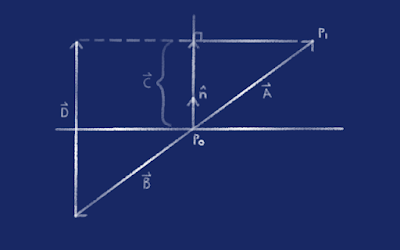

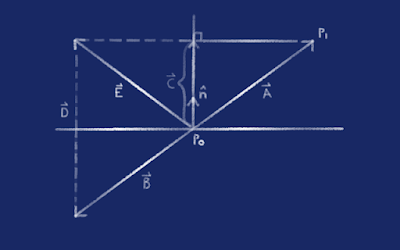

First off, we're looking for pX: the point where we'd hit the plane if we extended the vector from p0 to p1. Think of it kinda like shining a torch onto the floor while aiming it 5 metres in front of you. You can roughly gauge where the light will hit, but where exactly is it going to hit? In this example, p0 is the butt* of the torch and p1 is the light cone, or front of the torch.

To start off with, we need to get the normal of the plane, a vector perpendicular to the plane. It's labelled n in the picture above.

Imagine the plane as a polygon that covers a floor, and the points A, B & C as the corner points. The floor might have a fourth point (corner) or more, but we only need three points to define a plane.

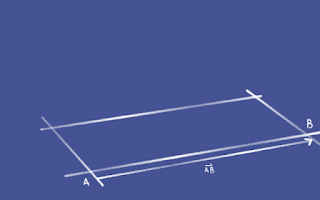

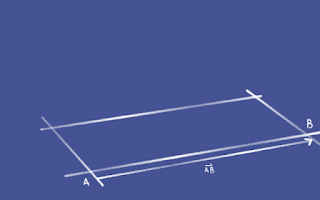

Get the vector from A to B.

AB = B - A

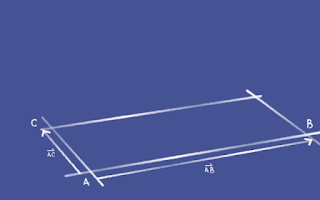

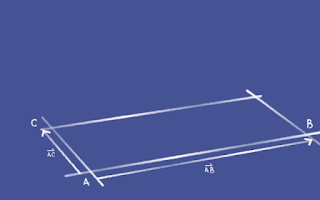

Now get the vector from A to C.

AC = C - A

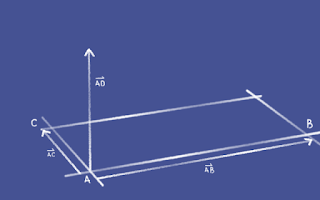

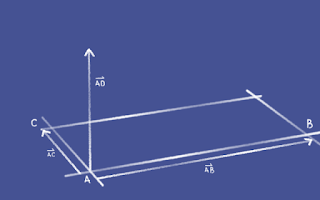

Take the cross product of AC and AB. This gives a vector perpendicular to both AB and AC.

It matters which way round you do this, as there are actually two vectors perpendicular to the plane (think towards the ceiling and down through the floor...). Swapping the order to AB x AC gives the one pointing the opposite way.

AD = AC x AB

Now we have our vector AD, it's just a case of turning it into a unit vector, represented as n in the first image.

Check out the tutorials I've linked to at the start to go from here.
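For completeness, here's a pure-Python sketch of the whole calculation, carrying on from the normal. A point X lies on the plane when n · (X - A) = 0, and substituting X = p0 + t(p1 - p0) gives t = n · (A - p0) / n · (p1 - p0). This is the standard formulation rather than a copy of the C++ node's code, and the helper names are hypothetical. The sign of n cancels out of t, so the winding order only matters if you need the normal itself.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def plane_normal(a, b, c):
    """Unit normal n from three points on the plane, using AD = AC x AB."""
    ab = sub(b, a)
    ac = sub(c, a)
    return normalize(cross(ac, ab))


def line_plane_intersection(p0, p1, a, b, c):
    """Point pX where the line through p0 and p1 meets the plane through a, b, c (or None)."""
    n = plane_normal(a, b, c)
    direction = sub(p1, p0)
    denom = dot(n, direction)
    if abs(denom) < 1e-9:
        return None  # the line runs parallel to the plane
    t = dot(n, sub(a, p0)) / denom
    return tuple(p + t * d for p, d in zip(p0, direction))


# Torch held 2 units above a floor (y = 0), aimed forwards and down
print(line_plane_intersection((0, 2, 0), (1, 1, 0), (0, 0, 0), (1, 0, 0), (0, 0, 1)))  # (2.0, 0.0, 0.0)
```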

Here's a quick demo of the C++ node in action.

Use case example: Finding a position for a pole vector

I rebuilt it in Python to take in the three points needed to define a plane. If I connect up the three joints of an arm or leg, we can find a position for a pole vector that won't offset the joints when applied.

I'll admit it's probably not the best way to go about building a pole vector, but I still think it gives good insight into how the pole vector stuff works.
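If you want something to compare against, a common alternative (a different technique from the line-plane node above) is to project the middle joint onto the line from the start joint to the end joint, then push the pole vector out from the middle joint, away from that projected point. The result stays in the plane of the three joints. A hypothetical pure-Python sketch:

```python
import math


def pole_vector_position(start, mid, end, distance):
    """Place a pole vector in the plane of three joints, `distance` out from the middle joint."""
    line = tuple(e - s for s, e in zip(start, end))
    to_mid = tuple(m - s for s, m in zip(start, mid))
    t = sum(a * b for a, b in zip(to_mid, line)) / sum(a * a for a in line)
    projected = tuple(s + t * l for s, l in zip(start, line))  # closest point to mid on start->end
    offset = tuple(m - p for m, p in zip(mid, projected))
    length = math.sqrt(sum(o * o for o in offset))
    if length < 1e-9:
        raise ValueError("the joints are in a straight line, so there's no plane to work with")
    return tuple(m + o / length * distance for m, o in zip(mid, offset))


# Shoulder, elbow and wrist with a slight bend in -Z
print(pole_vector_position((0, 0, 0), (1, 0, -1), (2, 0, 0), 5.0))  # (1.0, 0.0, -6.0)
```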

Downloads:

Plugin (Python)

* heh

Tuesday, 10 May 2016

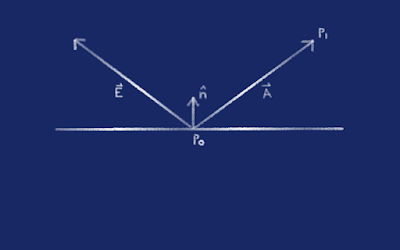

Reflecting a Vector

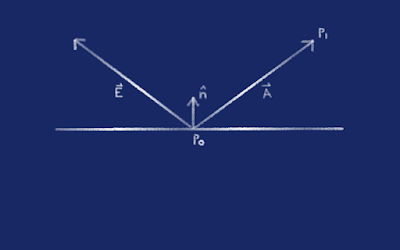

As you can probably guess, I went with learning about reflecting a vector as it hits a point on a plane.

I like to visualize it as shining a laser pen onto a mirror. cool eh?

There are tons of examples around, so I worked my way through them and tried building something similar using expressions or vanilla Maya nodes. When I got something working, I built a custom node.

The blue locator represents the source vector A, and the plane has the normal n and the point P. The resulting reflected vector (E in the steps below) is the red locator.

There are a few calculations we could skip if the plane were static, but it'd be kinda cool to move the plane around and see what the reflection would be. Here's how I went about it:

Get the vector A.

A = P1 - P0

--------

Reverse the vector A by multiplying it by -1 and add it to P0.

B = ( -1 * A ) + P0

--------

Project A onto the normal n, using the dot product. There's plenty of resources online if you're not sure what the dot product does.

C = ( A • n ) * n

--------

Multiply C by 2 and add to the vector B.

D = ( 2 * C ) + B

--------

We can find the vector E by subtracting P0 from the end point of D.

E = D - P0

--------

Final reflected vector.
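The steps above translate almost line for line into plain Python. This sketch assumes P0 is the point where A meets the plane, P1 is the source point, and n is unit length; the helper names are hypothetical. Since E works out to 2(A • n)n - A, it has the same length as A.

```python
def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(v, s):
    return tuple(x * s for x in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect(p0, p1, n):
    """Reflect following the steps above. Assumes n is unit length."""
    a = sub(p1, p0)              # A = P1 - P0
    b = add(scale(a, -1.0), p0)  # B = (-1 * A) + P0
    c = scale(n, dot(a, n))      # C = (A . n) * n
    d = add(scale(c, 2.0), b)    # D = (2 * C) + B
    return sub(d, p0)            # E = D - P0


print(reflect((0.0, 0.0, 0.0), (-1.0, 1.0, 0.0), (0.0, 1.0, 0.0)))  # (1.0, 1.0, 0.0)
```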

Here's a quick demo. :D

Downloads:

Scene file setup with standard math nodes.

Plugin (Python)

If you check out the Python script, you'll notice I'm not reading the normal from the plane's geometry. I could probably have used a mesh function set to get a face or vertex normal, but I cheated a bit and used the Y basis vector from the worldMatrix of the plane transform (worth normalizing, since that row is only unit length when the plane isn't scaled).

What happens if the plane has multiple faces? Which normal do we choose?

Obligatory first post!

At the start of the year I set myself the insurmountable task of getting to grips with the Maya API. I thought a blog would be a great way to record my progress* and share what I learn*.

Anyway, I'll give it a go. Along the way I'll be attempting to brush up on my maths by way of vectors and all that good stuff.

*living hell

*die from
